Large-scale data scraping has become a hot topic as the demand for big data grows. Businesses increasingly scrape data from many different websites to support business development. However, challenges such as blocking mechanisms arise when scraping operations scale up, and they can keep you from getting the data you need. Let's go through the challenges of large-scale data scraping in detail.

Large-Scale Data Scraping Challenges

1. Bot Access

The first thing to check, before you start, is whether the target website permits scraping. If its robots.txt disallows scraping, you can ask the site owner for permission, explaining your purpose and requirements. If the owner still refuses, it is better to find an alternative website with similar data.
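The robots.txt check can be automated before any page is requested. The following is a minimal sketch using Python's standard `urllib.robotparser`; the user agent name `my-scraper`, the sample rules, and the `example.com` URLs are assumptions made up for illustration. In a real run you would load the live file from the site instead of a string.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, used only to demonstrate the check.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://example.com/products"))
print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://example.com/private/data"))
```

With these sample rules the first URL is allowed and the second is not. Keep in mind that robots.txt only states the owner's wishes for crawlers; it does not replace asking for permission where the terms of the site require it.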

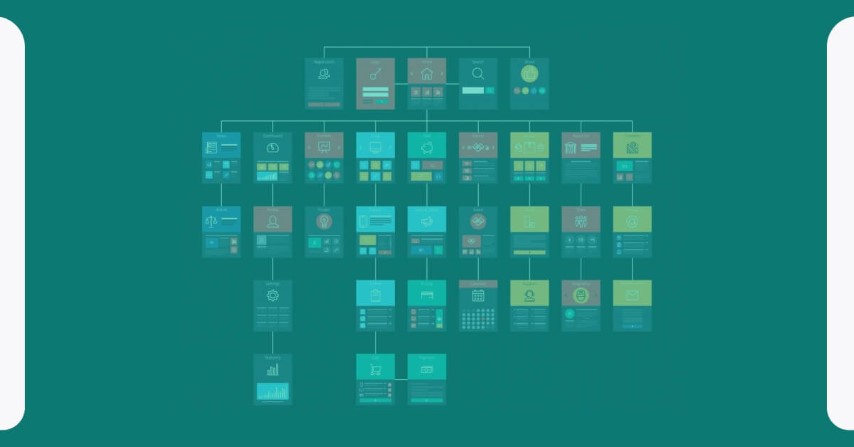

2. Complicated Page Structure

Most web pages are built with HTML. Web designers follow their own standards when designing pages, so page structures differ widely. When you need to scrape at large scale, you typically have to build a separate scraper for each website.

Furthermore, websites periodically update their content to improve the user experience or add new features, which often leads to structural changes on the page. Because scrapers are set up for a specific page design, they may not work on the updated page. Sometimes even a minor change on the target website requires you to adjust the scraper.
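One way to keep per-site scrapers manageable is to store the site-specific parts as data and to fail loudly when an expected field disappears, which is often the first sign of a redesign. The sketch below uses only the standard library; the site names, class names, and the `SITE_RULES` table are hypothetical, and the small parser only matches elements by class, which is far simpler than what a production scraper would need.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of the first element whose class list has target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0      # greater than 0 while inside the matched element
        self.parts = []
        self.done = False

    def handle_starttag(self, tag, attrs):
        if self.done or tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1
            self.done = self.depth == 0

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


# Hypothetical per-site rules: field name -> CSS class that holds it.
SITE_RULES = {
    "shop-a.example": {"title": "product-title", "price": "price"},
    "shop-b.example": {"title": "item-name", "price": "cost"},
}


def extract(site, html):
    """Extract all configured fields, raising if any field is missing."""
    record = {}
    for field, css_class in SITE_RULES[site].items():
        parser = ClassTextExtractor(css_class)
        parser.feed(html)
        parser.close()
        text = "".join(parser.parts).strip()
        if not text:
            raise LookupError(f"{site}: field {field!r} not found; layout may have changed")
        record[field] = text
    return record


page = '<h1 class="product-title">Mug</h1><span class="price">$5</span>'
print(extract("shop-a.example", page))
```

When a site changes its markup, only its entry in the rules table needs updating, and the `LookupError` makes the breakage visible instead of silently producing empty records.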

3. IP Blocking

IP blocking is a common method of stopping scrapers from accessing a website's data. It usually happens when a site detects a high number of requests from the same IP address. The site then either bans the IP completely or restricts its access, which interrupts the scraping process.
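Since blocks are usually triggered by request volume, spacing out requests to each host is a simple way to reduce the risk and to avoid overloading the site. The sketch below is one possible throttle, not a guaranteed way to avoid bans; the `Throttle` class, its parameter names, and the two-second interval are assumptions chosen for illustration. The clock and sleep functions are injectable so the logic can be checked without waiting.

```python
import time


class Throttle:
    """Enforce a minimum interval between requests to the same host."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = {}  # host -> earliest time the next request may start

    def wait(self, host):
        """Block until a request to host is allowed; return the seconds waited."""
        now = self.clock()
        delay = max(0.0, self.next_allowed.get(host, now) - now)
        if delay:
            self.sleep(delay)
        self.next_allowed[host] = now + delay + self.min_interval
        return delay


if __name__ == "__main__":
    throttle = Throttle(min_interval=2.0)
    for path in ("/page/1", "/page/2"):
        waited = throttle.wait("example.com")
        print(f"waited {waited:.1f}s before requesting {path}")
```

Call `wait(host)` immediately before each request. Different hosts are tracked separately, so a slow crawl of one site does not hold up the others.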

4. CAPTCHA

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is often used to separate humans from scraping tools by displaying images or logical problems that humans find easy to solve but scrapers do not.

Many CAPTCHA solvers can be integrated into bots to keep scraping running without interruption. Although technologies for overcoming CAPTCHA can help you obtain continuous data feeds, they can still slow down large-scale scraping.

5. Honeypot Traps

A honeypot is a trap that a website owner places on a page to catch scrapers. The traps can be links that are invisible to humans but visible to scrapers. When a scraper falls into the trap, the website can use the information it receives (e.g. the IP address) to block that scraper.
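A scraper can lower the chance of following a trap link by skipping links that a human could not see. The sketch below covers only the simplest cases, an anchor with the `hidden` attribute or with an inline style of `display:none` or `visibility:hidden`. It is an illustrative assumption about how a trap might be hidden: links hidden through external stylesheets, hidden parent elements, or off-screen positioning are not detected here.

```python
from html.parser import HTMLParser

# Inline style fragments that hide an element (compared with spaces removed).
HIDDEN_MARKERS = ("display:none", "visibility:hidden")


class VisibleLinkCollector(HTMLParser):
    """Collect href values of <a> tags that are not obviously hidden."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        style = (attributes.get("style") or "").replace(" ", "").lower()
        hidden = "hidden" in attributes or any(m in style for m in HIDDEN_MARKERS)
        if attributes.get("href") and not hidden:
            self.links.append(attributes["href"])


def visible_links(html):
    """Return the hrefs of links that pass the simple visibility checks."""
    collector = VisibleLinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


sample = (
    '<a href="/products">Products</a>'
    '<a href="/trap" style="display: none">secret</a>'
    '<a href="/trap2" hidden>secret</a>'
)
print(visible_links(sample))
```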

6. Slow Website Loading Speed

Websites may respond slowly or even fail to load when they receive too many access requests. That is not a problem when humans browse the site, as they only need to reload the page and wait for the website to recover. However, scraping may break because the scraper does not know how to deal with such a situation.
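A scraper can imitate the human "reload and wait" behaviour by retrying a failed request after a growing pause. The following is a minimal sketch of retries with exponential backoff; `fetch` stands for whatever function your scraper uses to download a page and is a hypothetical placeholder, as are the default attempt count and delays.

```python
import time


def fetch_with_retries(fetch, url, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on timeouts and connection errors.

    The pause doubles after each failure: base_delay, 2x, 4x, and so on.
    The last failure is re-raised so the caller can log or skip the URL.
    """
    for attempt in range(attempts):
        try:
            return fetch(url)
        except (TimeoutError, ConnectionError):
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


if __name__ == "__main__":
    # Hypothetical fetch function that fails twice before succeeding.
    state = {"calls": 0}

    def flaky_fetch(url):
        state["calls"] += 1
        if state["calls"] < 3:
            raise TimeoutError("server too slow")
        return f"<html>content of {url}</html>"

    print(fetch_with_retries(flaky_fetch, "https://example.com/", base_delay=0.1))
```

Backing off instead of retrying immediately also gives an overloaded site time to recover, rather than adding to the load that caused the failure.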

7. Dynamic Content

Many websites use AJAX to update content dynamically. Examples include infinite scrolling, lazy-loaded images, and showing more details when a button is clicked, all through AJAX calls. This is convenient for users who want to see more data on such websites, but not for scrapers.
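When the extra content arrives through AJAX calls, one common approach is to request the underlying data endpoint page by page instead of parsing the initial HTML. The sketch below assumes a hypothetical endpoint that returns JSON shaped like `{"items": [...], "next_page": 2}` with `next_page` set to null on the last page; real sites use many different response shapes, so treat the field names as placeholders. `fetch_page` is a function you would supply to perform the actual request.

```python
def collect_all_items(fetch_page, first_page=1, max_pages=100):
    """Follow next_page pointers until the endpoint reports no more pages.

    fetch_page(page) must return a dict with an "items" list and an optional
    "next_page" value. max_pages guards against endless pagination.
    """
    items = []
    page = first_page
    for _ in range(max_pages):
        payload = fetch_page(page)
        items.extend(payload["items"])
        page = payload.get("next_page")
        if page is None:
            break
    return items


# Stand-in for the real endpoint, used only to demonstrate the loop.
FAKE_PAGES = {
    1: {"items": ["a", "b"], "next_page": 2},
    2: {"items": ["c"], "next_page": None},
}

print(collect_all_items(FAKE_PAGES.__getitem__))
```

If a site has no usable data endpoint, a browser automation tool that executes the page's JavaScript is the usual alternative, at the cost of slower scraping.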

8. Login Requirements

Some protected data requires you to log in first. After you submit your login credentials, the browser automatically attaches the cookie values to subsequent requests you make to the site, so the website knows you are the same person who logged in earlier. Therefore, when scraping websites that require a login, make sure the cookies are sent with your requests.
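In a script, a cookie jar can play the role of the browser by storing the cookies from the login response and attaching them to later requests. The sketch below uses the standard `urllib.request` and `http.cookiejar` modules. The form field names `username` and `password` and any login URL you pass in are assumptions; actual login forms vary and may also require hidden tokens, so inspect the real form first.

```python
import urllib.parse
import urllib.request
from http.cookiejar import CookieJar


def make_session():
    """Return an opener that stores received cookies and resends them."""
    jar = CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def login(opener, login_url, username, password):
    """POST the credentials; the session cookie ends up in the opener's jar.

    The field names below are hypothetical and must match the real form.
    """
    form = urllib.parse.urlencode({"username": username, "password": password})
    with opener.open(login_url, data=form.encode("utf-8"), timeout=30) as response:
        return response.status


def fetch_protected(opener, url):
    """Fetch a page through the same opener so the stored cookies are sent."""
    with opener.open(url, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")


opener, jar = make_session()
print(f"cookies stored before login: {len(jar)}")
```

The important point is that `login` and `fetch_protected` share the same opener. A request made through plain `urllib.request.urlopen` would not carry the session cookie.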

9. Real-time Web Scraping

Real-time web scraping is essential for inventory tracking, price comparison, and similar tasks. The data can change in the blink of an eye and may translate into significant gains for a business. The scraper needs to monitor the websites continuously and extract data. Even so, there is still some delay, because requesting and delivering the data take time. Besides, acquiring a large amount of data in real time is a big challenge, too.

Actowiz's scheduled scraping can extract websites at intervals as short as 5 minutes to approximate real-time data scraping.
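To illustrate what interval-based monitoring involves, here is a generic polling sketch that fetches a page on a fixed schedule and reports only when the content has changed. It is not a description of how Actowiz implements scheduling; the `watch` function, its parameters, and the 300-second default (five minutes) are assumptions for illustration, and `fetch` is a placeholder for your own download function.

```python
import hashlib
import time


def watch(fetch, on_change, interval_seconds=300, max_polls=None, sleep=time.sleep):
    """Poll fetch() every interval_seconds and call on_change for new content.

    A SHA-256 digest of the previous content is kept so unchanged pages are
    ignored. max_polls=None polls forever; a number stops after that many polls.
    """
    last_digest = None
    polls = 0
    while max_polls is None or polls < max_polls:
        content = fetch()
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if digest != last_digest:
            on_change(content)
            last_digest = digest
        polls += 1
        if max_polls is None or polls < max_polls:
            sleep(interval_seconds)


if __name__ == "__main__":
    # Simulated price feed standing in for a real page download.
    prices = iter(["$10", "$10", "$12"])
    watch(lambda: next(prices), print, interval_seconds=0.1, max_polls=3)
```

The delay between a change on the site and its detection is at most one polling interval plus the fetch time, which is why shorter intervals come closer to real time but put more load on the target site.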

There will certainly be more challenges in data scraping in the future, but the general principle of scraping stays the same: treat websites nicely and do not overload them. Besides, you can always use a data scraping tool or a service like Actowiz to help you handle the scraping job.